Edgeworth's 1920 paper "The Element of Chance in Competitive Examinations" mocks excessive reliance on "reasoning with the aid of the gens d'arme's hat -- from which as from a conjuror's, so much can be extracted." In this spirit, Bruce Hansen's recent paper, "A Modern Gauss-Markov Theorem", argues that the econometrics slogan "OLS is BLUE" can be modified to "OLS is BUE". That is, we need not restrict attention to linear estimators, since OLS can be considered minimum variance unbiased within a suitably general class of regression models.

Since I'm thanked in the acknowledgments, I thought it might be prudent to make explicit a few reservations I have about Bruce's version of the GMT. Here, then, is my unexpurgated original comment on an earlier draft of the paper.

Bruce,

I hope that you won’t mind an unsolicited comment on your recent Gauss-Markov paper. I was wandering around somewhat aimlessly yesterday looking for recent work on model averaging for a refereeing task, and it attracted my attention. (Spoiler alert: I’ve always hated the GM Thm since it seemed to restrict attention to such a small class of estimators that its optimality claim was nearly vacuous.)

There is, of course, the (Rao?) result that OLS is MVUE in the Gaussian linear model. But you want to say something much stronger: that it is MVUE in a much bigger class of models. Then the qualifiers become critical. I think that I understand where you are coming from, but I wonder whether you might be misleading the youth of econometrics by the way that you develop the argument. Your “for all F in $\mathcal{F}_2$” is quite strong. Of course, median regression can be much more efficient than mean regression in iid-error linear models, and both are unbiased when the errors are symmetric. When the errors are iid but not symmetric, median regression is biased, but only in the intercept. The slope estimates can still be much more efficient than the mean regression estimates. I don’t mean to suggest that there is anything special about median regression here; a plethora of other estimators would serve as well.

There is merit, I concede, in the idea that “if you want to estimate a mean you should use the sample mean, etc.” I’ve heard this from Lars several times. But on the other side of the argument there is the infamous Bahadur-Savage result that the mean is never identified, in the sense that slight perturbations of the tails of the population distribution can make it bounce around arbitrarily. Of course, this depends upon what “slight” might mean. Your “for all F…” condition, together with unbiasedness for every linear contrast, seems to bring us back very close to requiring linearity.
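To make the median-versus-mean efficiency point concrete, here is a small Monte Carlo sketch. It is my own illustration, not something from Hansen's paper, and every setting in it is an assumption chosen for the example. The model is a simple regression y = 1 + 2x + u, with x uniform on [0, 10] and iid lognormal(0, 1) errors recentred to have mean zero. OLS is therefore unbiased, but the error distribution is strongly asymmetric. The helper names (`ols_fit`, `lad_fit`, `simulate`) are hypothetical. `lad_fit` computes median (LAD) regression by profiling out the intercept as the median of y - bx and then running a ternary search over the slope. This works because the profiled objective is convex in b. Under these assumptions the LAD intercept should drift toward 1 + median(u) = 2 - e^{1/2}, about 0.35, while the LAD slope should stay centred near 2. Its sampling variance should also be clearly smaller than that of the OLS slope: the asymptotic variance factors are roughly 1.57 for LAD and 4.67 for OLS.

```python
import math
import random
import statistics


def ols_fit(x, y):
    """Least-squares intercept and slope for a single-covariate regression."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    b = sxy / sxx
    return my - b * mx, b


def lad_fit(x, y, lo=-10.0, hi=10.0, iters=60):
    """Median (LAD) regression: minimise sum |y - a - b*x| over (a, b).

    For a fixed slope b the best intercept is median(y - b*x), and the
    profiled objective is convex in b, so ternary search on [lo, hi]
    converges to a minimising slope (assumed to lie in that bracket).
    """
    def profile(b):
        r = [yi - b * xi for xi, yi in zip(x, y)]
        a = statistics.median(r)
        return sum(abs(ri - a) for ri in r), a

    for _ in range(iters):
        m1 = lo + (hi - lo) / 3
        m2 = hi - (hi - lo) / 3
        if profile(m1)[0] <= profile(m2)[0]:
            hi = m2  # by convexity a minimiser lies in [lo, m2]
        else:
            lo = m1  # otherwise a minimiser lies in [m1, hi]
    b = (lo + hi) / 2
    return profile(b)[1], b


def simulate(n=100, reps=200, seed=1):
    """OLS slopes, LAD slopes and LAD intercepts under skewed iid errors."""
    rng = random.Random(seed)
    shift = math.exp(0.5)  # mean of lognormal(0, 1); subtracting it gives mean-zero errors
    ols_b, lad_b, lad_a = [], [], []
    for _ in range(reps):
        x = [rng.uniform(0.0, 10.0) for _ in range(n)]
        y = [1.0 + 2.0 * xi + (rng.lognormvariate(0.0, 1.0) - shift) for xi in x]
        ols_b.append(ols_fit(x, y)[1])
        a, b = lad_fit(x, y)
        lad_b.append(b)
        lad_a.append(a)
    return ols_b, lad_b, lad_a


if __name__ == "__main__":
    ols_b, lad_b, lad_a = simulate()
    print("OLS slope: mean %.3f, var %.5f" % (statistics.fmean(ols_b), statistics.variance(ols_b)))
    print("LAD slope: mean %.3f, var %.5f" % (statistics.fmean(lad_b), statistics.variance(lad_b)))
    print("LAD intercept: mean %.3f (median target %.3f)"
          % (statistics.fmean(lad_a), 2.0 - math.exp(0.5)))
```

The ternary search is chosen only to keep the sketch dependency-free. A linear-programming solver would be the usual way to fit median regression in practice.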

The paper is fine; I just think that it might need a surgeon general’s warning of some sort.

Best

Roger

PS. The photo, taken recently at the Museum of the History of Paris (Carnavalet), depicts a Napoleonic-era metal hat of the type that Edgeworth presumably had in mind.

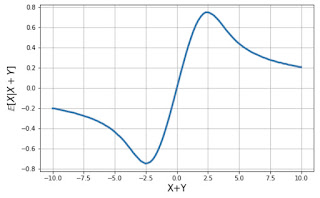

PPS (added March 12, 2022): There are now two papers circulating, one by Steve Portnoy and the other by Benedikt Potscher, showing that Hansen's unbiasedness condition admits only linear estimators, so my "very close" in the note above could be strengthened a bit. It also occurred to me after writing the original message that Boscovich's 1757 proposal defines an estimator that is asymptotically unbiased in iid-error linear models and can have smaller asymptotic MSE than OLS. Details are given here.
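For readers who haven't met it, Boscovich's proposal is usually stated as follows: choose the line that minimises the sum of absolute residuals, subject to the residuals summing to zero. With a single covariate, which is an assumption of this sketch, the zero-sum constraint forces the line through the centroid (x̄, ȳ). What remains is a weighted median of the slopes (y_i - ȳ)/(x_i - x̄), with weights |x_i - x̄|. The code below is a minimal illustration of that construction. The function names are hypothetical, and it is not necessarily the version developed in the details linked above.

```python
import statistics


def weighted_median(values, weights):
    """Lower weighted median: the smallest value whose cumulative weight
    reaches half of the total weight (weights assumed positive)."""
    pairs = sorted(zip(values, weights))
    half = sum(weights) / 2.0
    cum = 0.0
    for v, w in pairs:
        cum += w
        if cum >= half:
            return v
    return pairs[-1][0]  # guard against floating-point shortfall


def boscovich_fit(x, y):
    """Boscovich (1757): minimise sum |r_i| subject to sum r_i = 0
    for the single-covariate line y = a + b*x.

    The constraint forces a = mean(y) - b*mean(x). Then
    |r_i| = |x_i - mean(x)| * |s_i - b|, where s_i is the slope from the
    centroid to point i, so the optimal b is a weighted median of the s_i.
    """
    mx, my = statistics.fmean(x), statistics.fmean(y)
    slopes, weights = [], []
    for xi, yi in zip(x, y):
        dx = xi - mx
        if dx != 0:  # points with x_i == mean(x) only add a constant to the objective
            slopes.append((yi - my) / dx)
            weights.append(abs(dx))
    if not slopes:
        raise ValueError("need at least two distinct x values")
    b = weighted_median(slopes, weights)
    return my - b * mx, b
```

For example, `boscovich_fit([0, 1, 2, 3, 4], [1, 3, 5, 7, 9])` returns intercept 1.0 and slope 2.0, because every centroid slope equals 2. The zero-sum constraint ties the intercept to the sample means, while the slope itself is an L1-type (weighted median) estimate.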